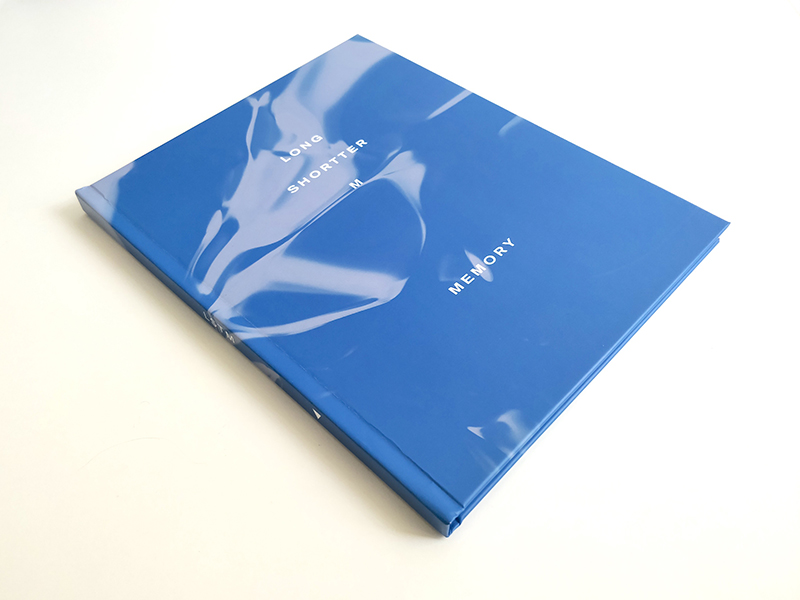

| Title | Long Short Term Memory (2017) |

| Media | Hardcover Book (92 pp, 8 x 10 in, Edition of 5) |

| Cat No. | STDIO-028 |

| Info | Long Short Term Memory (2017) comprises texts and images produced by artificial neural nets imbued with memory, exploring architectures for forgetting within the realm of machine learning. A recurrent neural net (RNN) trained on a corpus of contemporary rationalist philosophy and computational theory makes its own synthetic claims. Devoid of grammatical axioms, language is but a stream of characters to the RNN. Ingesting the work of Luciana Parisi, François Laruelle, Quentin Meillassoux, and others, the net offers its own novel speculations. Alongside the text, the latent space of an LSTM network is hijacked with abstract inputs, revealing formal aspects of its architecture in a series of black & white exposures. These depict a complex of smooth activation functions arranged in such a way as to decay information during the process of learning. The publication was made available online by the Glass Bead Journal and is in the ZKM collection. |

| License | © All Rights Reserved |

| Download | (.zip, 28MB) |