Decrypting the Event

Notes on Seminar 4 of Computation as Capital @ The New Centre

March 2015

“Between the secret and communication there only exist determinative relationships that are unilateral, asymmetrical, or irreversible.”

- François Laruelle, ‘The Truth According To Hermes: Theorems on the Secret And Communication’

The contemporary concern with tactics of opacity stems from a networked gaze of panoptic fibre now global in reach. Laruelle provides us with a set of conceptual procedures attuned to the task of elaborating a cryptography of being, via a non-performative mode of inquiry that goes beyond negation. This challenge to relational thought should be brought to bear on computation itself, signalling an exit from the bind of total addressability.

What does it mean to encrypt? Encryption inscribes the irreversible into computation, activating a mathematical asymmetry performed by way of a cipher. The irreversible function implies a unilateral determination with the evental quality of a decision. As a calculation that admits no reflection, the encrypted hash contains a claim to radical immanence.
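The irreversibility invoked here can be made concrete with a minimal sketch. It assumes SHA-256 as the one-way function, a choice made purely for illustration since no algorithm is named above, and the helper name `digest` is hypothetical. Strictly speaking a hash is one-way for everyone, whereas a cipher remains reversible for whoever holds the key; the sketch shows only the first, stronger kind of asymmetry.

```python
import hashlib


def digest(message: str) -> str:
    """Return the SHA-256 digest of a message as a hex string."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


a = digest("the secret")
b = digest("the secret.")  # one extra character

print(a)
print(b)
print(len(a) == len(b) == 64)      # output length is fixed, whatever the input
print(a == digest("the secret"))   # deterministic: same input, same digest
print(a != b)                      # a small change yields an unrelated digest
```

Computing the digest is trivial; no practical procedure is known for running the function backwards from digest to message, short of guessing inputs.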

Encryption suggests itself as an informational correlate to the Laruellian secret, an uninterpretable kernel which does not give itself to appearance – there is no hermeneutics of the One, just as the encrypted confers no meaning – but on closer inspection this resemblance is merely a surface effect.

If the digital is the “division of the one into two” (Galloway), the notion of a dark crypt of data seems incompatible with the immanence of the Laruellian object, hindered as it is by its fundamental relationality. A datum, from the Latin, is always ‘something given’; data cannot but appear.

The dyadic mode of the digital seems to preclude pure immanence. Laruelle considers it “doubtful” that philosophy’s “auto-speculative kernel” is reducible “to numerical combination”; the secret is “numerically invulnerable”. He outright rejects a dichotomy of thought and computing, just as he dismisses a cognitivist conception of philosophy. Even though artificial intelligence may surpass human capacities, it won’t do so in terms of an analogous performance.

For Laruelle, philosophy is that which effectuates for the first time, a “radical commencement” which is not equivalent to a “performance” of consciousness. Non-philosophy “radicalizes” this irreducibility as it forgoes the decision altogether and avoids performance as such – it remains defiantly incomputable.

Similarly Negarestani, in his call for a revisionary humanism, highlights the epistemic limits of the algorithm by characterising human rationality in terms of a non-monotonic logic engaged in abductive reason, a sapience beyond the scope of algorithmic modes of inference. The distinction with Laruelle is in a commitment to the inhuman as the basis for a constructive “vector of reason” in the face of an artificial general intelligence.

At stake in tracing an artificially computable determination – the inhuman capacity for an irreversible decision or spontaneous event – is an elaboration of a politics and ethics of the inhuman, as part of a wider project of inhumanism. Pure immanence in this regime amounts not simply to a withdrawal, negation or refusal to compute, but to a complete abstention from the problem of calculation as such.

The digital is governed by what we might call the principle of sufficient metadata – that all data necessarily entails the existence of metadata which reflects upon it. This follows from the definition of digital data by two intrinsic properties, addressability and quantifiability – the fact that data “must conjoin givenness and relation” (Galloway).

Therein lies the limit of cryptography as a claim to pure immanence in the digital – there will always exist metadata, itself a form of data, precipitating a recursive relation of unending reflection that both locates (addresses) and measures (quantifies) the secret. Even as the encrypted declares itself uninterpretable, it does so within a regime of cascading relation, set within a broader binarism.
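A small sketch of this residue, under stated assumptions: the message is enciphered with a one-time pad built from the standard library, and the function names (`one_time_pad`, `describe`) and the two addresses are hypothetical, introduced only for illustration. However opaque the ciphertext, its envelope still locates and measures it.

```python
import secrets
import time


def one_time_pad(plaintext: bytes) -> tuple[bytes, bytes]:
    """XOR the plaintext with a fresh random key of equal length."""
    key = secrets.token_bytes(len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, key))
    return ciphertext, key


def describe(ciphertext: bytes, sender: str, recipient: str) -> dict:
    """Collect what remains readable without the key (hypothetical envelope)."""
    return {
        "length": len(ciphertext),   # quantifies the secret
        "sender": sender,            # addresses it
        "recipient": recipient,
        "timestamp": time.time(),
    }


message = b"meet at the usual place"
ciphertext, key = one_time_pad(message)
envelope = describe(ciphertext, "alice@example.org", "bob@example.org")

print(ciphertext.hex())  # uninterpretable without the key
print(envelope)          # fully legible regardless
```

The content is withheld, yet its size, its endpoints and its moment of transmission are all given.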

The asymmetry that allows an individual to encrypt a message such that the entire computational power of the NSA will toil to decrypt it is undermined by the principle of sufficient metadata, which admits metadata as a necessary condition, revealing the secret on a different scale of abstraction.

Likewise a dark web possesses no pure immanence: there is no end to the depths of metadata, no matter how far you obfuscate up the stack. There is always another layer to the onion skin of relation – an IP address exposing an entry to the gates of the hidden realm – a residual is always to be found.

The digital regime is a domain rooted in difference, in the primary distinction enacted by the binary, the cleaving of the one into two. Any attempt at withdrawal is reassimilated, leaving us with a tyranny of relation – the addressability of any gesture.

There can be no evental site (Badiou) in digital computation, as there can be no excluded part due to the principle of sufficient metadata. A computer may produce unpredictable results, but these are grounded by determinate functions; they remain calculable. Indeterminacy may be artificially seeded via an external source of entropy, but to locate an immanent undecidability in computing, one that mirrors Badiou’s incalculable event, one has to trace computation to its limit state.
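The distinction between determinate unpredictability and externally seeded indeterminacy can be sketched as follows. The helper `pseudo_random_bytes` and the seed value are hypothetical; the sketch assumes Python's standard pseudo-random generator as the determinate function and the operating system's entropy pool, via `os.urandom`, as the external source.

```python
import os
import random


def pseudo_random_bytes(seed: int, n: int) -> bytes:
    """Produce n bytes from a seeded, fully deterministic generator."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(n))


# The output looks arbitrary, but it is a function of the seed alone.
first = pseudo_random_bytes(seed=2015, n=8)
second = pseudo_random_bytes(seed=2015, n=8)
print(first.hex(), second.hex())
print(first == second)  # same seed, same sequence

# Indeterminacy enters only from outside the program, via the OS entropy pool.
external = os.urandom(8)
print(external.hex())
```

Whatever surprise the first two sequences hold is recoverable from the seed; only the third draws on something the program itself does not calculate.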

Parisi’s claim of a speculative computing aims to fold the halting probability, Omega (Ω) – a real number that can be computably enumerated from below, yet whose infinite binary expansion is algorithmically random and therefore incomputable – into the “core” of computation. It seeks to transform the limit of a system into its intrinsic property, to render a threshold into a constitutive condition. This movement, proceeding via Whitehead, admits indeterminacy into the heart of computation, but only at the cost of a coherent definition of what it is to compute.

Randomness within computation is only ever encountered as a limit condition, just as the probabilities embodied by Ω are only enumerable in infinite time. At these thresholds computing collapses into indeterminacy. To state that “the immanence of infinities” (Parisi), present as “random actualities” (Ibid) in incomputable algorithms, is in some sense constitutive of “programming cultures” (Ibid) is a provocative but hyperbolic claim.

We might consider instead an indeterminacy present in the very definition of computation, in the form of the undecidability of the halting problem, as a symptom of an incomplete axiomatics. Computing suffers from an indeterminate relation to time.
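This indeterminate relation to time can be illustrated with a minimal sketch. It assumes programs modelled as Python generators that yield once per step, and the names `run_with_budget`, `countdown` and `loop_forever` are hypothetical. An observer with a finite budget can confirm that a program halts, but can never confirm that it does not; the second answer is only ever "unknown so far".

```python
from typing import Callable, Iterator, Optional


def run_with_budget(program: Callable[[], Iterator[None]],
                    max_steps: int) -> Optional[int]:
    """Advance a program step by step for at most max_steps attempts.

    Returns the number of steps completed if the program halts within
    the budget, or None if no verdict has been reached yet.
    """
    process = program()
    for steps in range(max_steps):
        try:
            next(process)
        except StopIteration:
            return steps  # the program halted after this many steps
    return None  # undecided: it may halt later, or never


def countdown() -> Iterator[None]:
    n = 3
    while n > 0:
        n -= 1
        yield


def loop_forever() -> Iterator[None]:
    while True:
        yield


print(run_with_budget(countdown, 100))     # 3
print(run_with_budget(loop_forever, 100))  # None
```

Raising the budget changes nothing in principle: for `loop_forever` the answer stays suspended at every finite horizon, and no general procedure exists to settle it in advance.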

The infinite probabilities of Ω cast a virtual shadow over computation, marking out the boundaries of its determinacy, unactualised but nevertheless present as real objects with real effects. The figure of the incomputable is immanent to the regime, necessitated by the incompleteness of computation, but cannot simply be reduced to an “intrinsic randomness” (Ibid) embedded in the algorithm.

Ultimately the irreversible cipher that encrypts, while possessing the determinacy of a decision and producing the qualities of a secret, fails to attain the status of either radical immanence or evental site by virtue of its grounding in the digital. The age old dualism of the discrete (quanta) and the continuous (continua) appears intact. But we may look to the root of quanta, its very constitution, for an exit.

Quantum computing (Deutsch) presents a flight from this dualism, the promise of a computing faithful to the indeterminacy of physical matter. It suggests the capacity for the incalculable event to emerge as a spontaneous decision in computation. Quantum cryptography contains the potential to constitute a Laruellian secret, to apriorize the a priori.

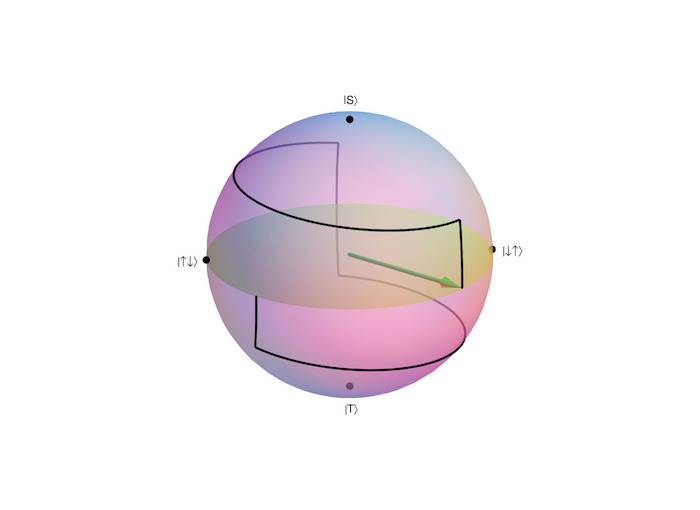

Quantum information theory is the domain in which a non-computation could be effectuated as correlate to Laruelle’s method. Its unit, the qubit, lays claim to being an uninterpretable kernel – an unreadable, indivisible form that can nevertheless be transported (entanglement) and computed (quantum logic gates).

The quantum bit is a continuously valued superposition of states. It is of the domain of continua rather than the digital or, more precisely, a figment of the “rupture of the discontinuous” (Barad), the unraveling of the duality. At no point is it both fully addressable and quantifiable, as stated by the uncertainty principle. It never reveals itself, admits no reflection – measurement precipitates its collapse – and only comes into relation during quantum computation. As such only its results (calculations) are apparent. Encryption in this scheme produces secrets that cannot be preserved during observation.
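The collapse under measurement can be sketched in a few lines, with a loud caveat: this is a classical simulation of the textbook single-qubit model, and so itself a digital calculation that describes the qubit rather than instantiating one. The class name `Qubit` and the fixed seed are hypothetical, and entanglement is not modelled.

```python
import math
import random


class Qubit:
    """A single qubit as two complex amplitudes, normalised on creation."""

    def __init__(self, alpha: complex, beta: complex) -> None:
        norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
        self.alpha = alpha / norm
        self.beta = beta / norm

    def probabilities(self) -> tuple[float, float]:
        """Probabilities of reading 0 or 1 (squared amplitude magnitudes)."""
        return abs(self.alpha) ** 2, abs(self.beta) ** 2

    def measure(self, rng: random.Random) -> int:
        """Sample an outcome, then collapse the state onto that outcome."""
        p0, _ = self.probabilities()
        outcome = 0 if rng.random() < p0 else 1
        # The continuous amplitudes are lost; only a basis state remains.
        self.alpha, self.beta = (1 + 0j, 0j) if outcome == 0 else (0j, 1 + 0j)
        return outcome


rng = random.Random(0)  # seeded only to make the sketch repeatable
q = Qubit(1, 1)         # equal superposition of 0 and 1
print(q.probabilities())

first = q.measure(rng)
second = q.measure(rng)
print(first, second)    # the second reading always repeats the first
print(q.probabilities())
```

A single reading yields one bit and destroys the superposition that produced it; the amplitudes themselves never appear in the result.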

Laruelle asserts the need for “a theory of the event that leans towards quantum theory by way of the generic”. The qubit could be the foundation for both the theory of a computable event (as spontaneous decision) and a speculative theory of non-computation, suggesting a kernel possessing a radical immanence located within the realm of computation, a secret that evades calculability, present at the origin of the discrete.

Image: Bloch Sphere depicting the manipulation of a qubit (Credit: Xin Wang)

Sources

- Alain Badiou, 2013, ‘Logics of Worlds: Being and Event II’, Bloomsbury Academic

- Karen Barad, 2014, ‘Re-membering the Future, Re(con)figuring the Past’, Duke University Keynote

- David Deutsch, 1985, ‘The Church-Turing principle and the universal quantum computer’, Proceedings of the Royal Society of London A 400, pp. 97-117

- Alexander R. Galloway, 2014, ‘Laruelle: Against the Digital’, University of Minnesota Press

- François Laruelle, 2013, ‘The Transcendental Computer: A Non-Philosophical Utopia’, translated by Taylor Adkins & Chris Eby

- François Laruelle, 2010, ‘The Truth According To Hermes: Theorems on the Secret And Communication’, Parrhesia, Number 9, pp. 18-22

- Reza Negarestani, 2014, ‘Labour of the Inhuman’, e-flux

- Reza Negarestani, 2014, ‘Intelligence and Spirit’, Urbanomic

- Luciana Parisi, 2013, ‘Contagious Architecture’, MIT Press, pp. 14-21